Is Artificial Intelligence capable of substantial support to human thinking?

Cognitive computing is not an IBM “fraud”!

In February, a couple of weeks ago, Dr Roger Schank castigated IBM. Accusing IBM of “fraud”, Dr Schank asserted that “they are not doing ‘cognitive computing’ no matter how many times they say they are”.

Dr Schank has been CEO of Socratic Arts since 2002 and is a prolific publisher of articles and books. He formerly held positions including Professor of Computer Science and Psychology at Yale University and Director of the Yale Artificial Intelligence Project (1974–1989), visiting professor at the University of Paris VII, Assistant Professor of Linguistics and Computer Science at Stanford University (1968–1973), and research fellow at the Institute for Semantics and Cognition in Switzerland.

What attracted Dr Schank’s ire was a proclamation from an IBM Vice President of Marketing, Ann Rubin, that IBM’s Watson AI platform could “outthink” human brains in areas where finding insights and connections can be difficult due to the abundance of data.

“You can outthink cancer, outthink risk, outthink doubt, outthink competitors if you embrace this idea of cognitive computing,” she apparently said.

Clearly attempting to out-Musk the wildly optimistic predictions that wires embedded in a pig’s brain and interacting with a “connected” microchip are the cusp of the great “Singularity”, Ms Rubin may be tempted to predict a colony of one thousand baby Watsons colonising Mars by Christmas 2026. Nevertheless, she runs IBM’s corporate advertising program and can be forgiven for doing what Marketing people are supposed to do.

Computers that “think”??? Oh well, OK then.

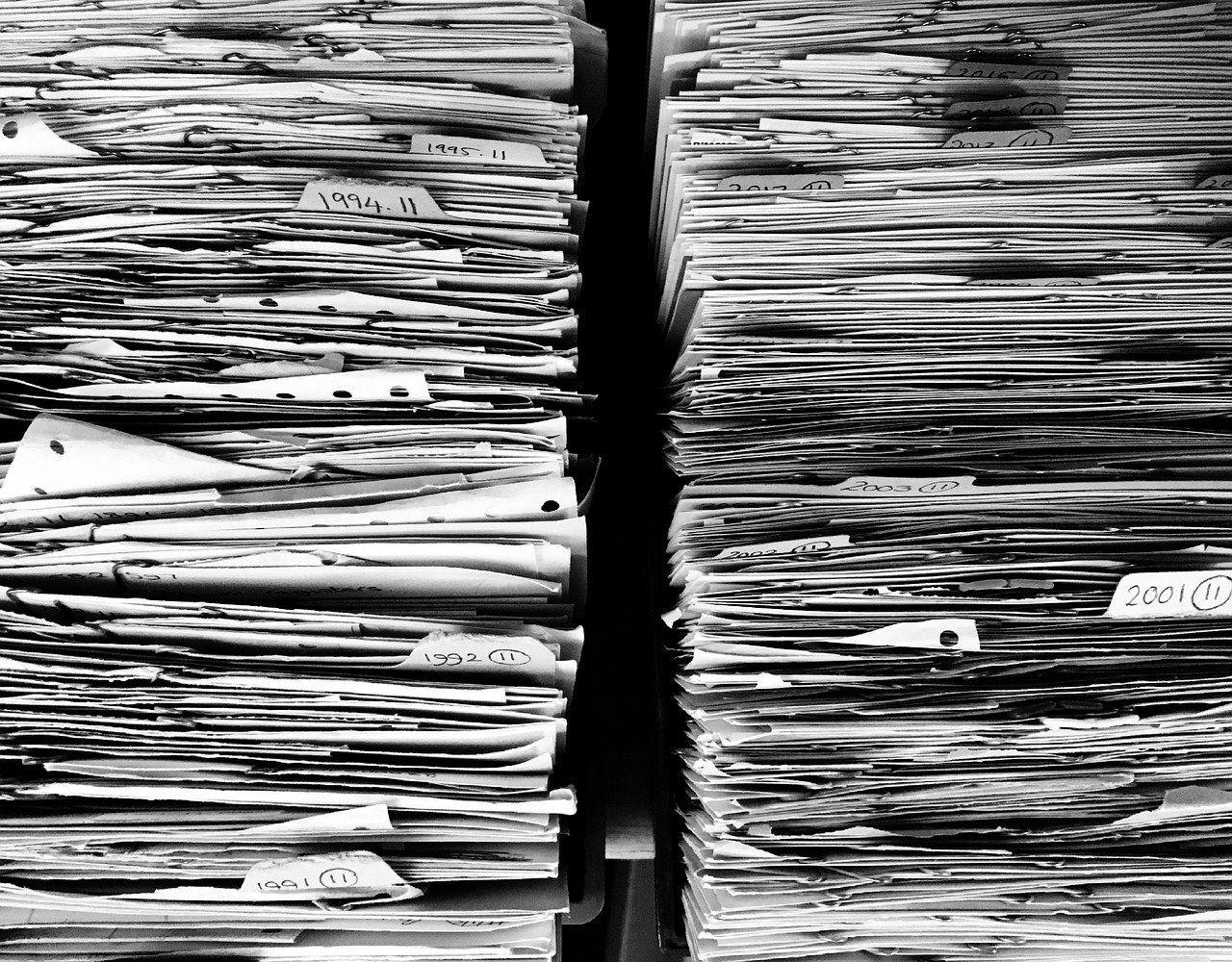

Soon after the COVID-19 outbreak, we approached a University epidemiologist to offer help with our Artificial Intelligence know-how and our “cognitiveAI” platform. After a couple of chats with an eminent Professor and epidemiologist, the challenge we set ourselves was to build a system that could read 75,000 coronavirus research papers stored and distributed by the “Semantic Scholar” medical research database and search engine.

We had learned that researchers look to prior research for clues when pursuing some new hypothesis.

Clearly no human researcher can read 75,000 research papers and remember everything in them; just reading them would take a year at roughly 200 papers every day. Nor could a human remember the content of 75,000 research papers and then ‘join the dots’ between all that content to find clues to propose or support a new hypothesis.

Over the next five months we allocated around 100 person-days to see what we could do with an artificial intelligence system built from scratch.

For the nerds amongst us, a quick overview of the technical stuff….

“Betsy”, as we call our coronavirus AI system, was provided with 3000 coronavirus research papers that we downloaded from Semantic Scholar. Betsy reads each sentence in each paper and uses Neural Networks, Semantic Computing and proprietary algorithms to extract “relevant clauses and words”, what we call “facts”. The extracted facts are represented in “RDF triples”, in subject-predicate-object form.

For example, the part-sentence “the S protein can induce protective immunity against viral infection” is transformed into two subject-predicate-object triples: (the S protein, induce, protective immunity) and (protective immunity, against, viral infection). Epidemiologists annotate some “facts” with a concept, thus training the neural network to associate facts with concepts and enabling a “semantic layer” above the raw text.
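For readers who think in code, here is a minimal Python sketch of how the two triples from that part-sentence might be held in memory. It is an illustration only, not Betsy's implementation: the `Triple` type and the `chain` helper are hypothetical names, and the sentence is split by hand rather than by a neural network.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One extracted "fact" in subject-predicate-object form."""
    subject: str
    predicate: str
    obj: str


# The part-sentence from the text, split by hand into its two facts.
facts = [
    Triple("the S protein", "induce", "protective immunity"),
    Triple("protective immunity", "against", "viral infection"),
]


def chain(triples):
    """Return pairs of triples where the object of the first is the subject of the second."""
    return [(a, b) for a in triples for b in triples if a.obj == b.subject]


for first, second in chain(facts):
    # Shows how two facts from one sentence link up through a shared term.
    print(first.subject, "->", first.obj, "->", second.obj)
```

The shared term "protective immunity" is what lets the two facts join up, which is the basic mechanism behind linking facts within a sentence.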

Facts can be related to a concept (by a predicate), to another fact by virtue of appearing in the same sentence, and so on for every sentence in the paper. A large number of RDF triples can therefore be generated from each research paper highlighting the key concepts and related facts being discussed in the paper. These triples form a sophisticated data structure called a “Semantic Graph”, that is enriched as more facts are added from more research papers.
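A rough Python sketch of such a graph follows, assuming nothing about how Betsy actually stores it. The `SemanticGraph` class, its method names and the paper ids `paper-A` and `paper-B` are all invented for illustration; a production system would more likely use a dedicated RDF store.

```python
from collections import defaultdict


class SemanticGraph:
    """Toy semantic graph: nodes are terms, edges are predicates, each fact remembers its papers."""

    def __init__(self):
        self.edges = defaultdict(set)    # subject -> {(predicate, object)}
        self.sources = defaultdict(set)  # (subject, predicate, object) -> {paper ids}

    def add(self, paper_id, subject, predicate, obj):
        """Record one triple and the paper it was extracted from."""
        self.edges[subject].add((predicate, obj))
        self.sources[(subject, predicate, obj)].add(paper_id)

    def neighbours(self, node):
        """Return the (predicate, object) pairs leaving a node, sorted for stable output."""
        return sorted(self.edges.get(node, set()))

    def papers_mentioning(self, node):
        """Return every paper with a fact that has the node as subject or object."""
        found = set()
        for (subject, _predicate, obj), paper_ids in self.sources.items():
            if node in (subject, obj):
                found |= paper_ids
        return sorted(found)


graph = SemanticGraph()
# Hypothetical paper ids; the facts reuse the example sentence from the text.
graph.add("paper-A", "the S protein", "induce", "protective immunity")
graph.add("paper-A", "protective immunity", "against", "viral infection")
graph.add("paper-B", "protective immunity", "against", "viral infection")

print(graph.neighbours("the S protein"))
print(graph.papers_mentioning("protective immunity"))
```

Adding the same fact from a second paper does not duplicate the edge; it only widens the list of sources, which is one way a graph can be "enriched" as more papers are read.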

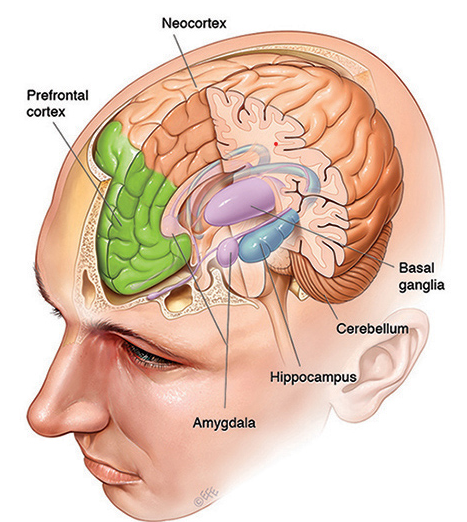

A Semantic Graph possibly mimics the semantic memory of the human brain’s neocortex, which neuroscientists describe as “a type of long-term memory involving the capacity to recall words, concepts, or numbers, which is essential for the use and understanding of language”.

A putative ontology, also stored in the Semantic Graph, can be semi-automatically generated from the triples to “join the dots” within and between papers. This process can be performed on 700,000 research papers as easily as it can be performed on 700 and provides a rich description of each research paper encoded for a computer to understand, by itself.

Ranking can be applied to highlight the papers of highest relevance. SPARQL queries can quickly find related papers that correspond with a researcher’s domain of interest, and the results can be represented graphically using Visualizers. Betsy also supports natural-language questions and answers over the Semantic Graph, maybe mimicking a human at a very basic level, but with a massive amount of well-ordered data at Betsy’s immediate disposal.
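To give a feel for querying and ranking, here is a plain-Python stand-in. It is not SPARQL and not Betsy's ranking method, which the article does not describe: `match` imitates a single triple pattern in which `None` plays the role of a query variable, and `rank_papers` assumes the simplest possible score, the number of facts a paper shares with the query. The paper ids are hypothetical.

```python
# Facts as (subject, predicate, object) tuples, reusing the example sentence.
S_IMMUNITY = ("the S protein", "induce", "protective immunity")
IMMUNITY_INFECTION = ("protective immunity", "against", "viral infection")

# Hypothetical papers and the facts extracted from each.
paper_facts = {
    "paper-A": [S_IMMUNITY, IMMUNITY_INFECTION],
    "paper-B": [IMMUNITY_INFECTION],
    "paper-C": [],
}


def match(triples, subject=None, predicate=None, obj=None):
    """Return triples that agree with every position that is not None."""
    return [
        t for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    ]


def rank_papers(papers, query_facts):
    """Rank papers by how many facts they share with the query, dropping papers with none."""
    query = set(query_facts)
    scores = {paper_id: len(query & set(facts)) for paper_id, facts in papers.items()}
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [(paper_id, score) for paper_id, score in ranked if score > 0]


all_facts = {fact for facts in paper_facts.values() for fact in facts}
hits = match(all_facts, subject="protective immunity")
ranking = rank_papers(paper_facts, [S_IMMUNITY, IMMUNITY_INFECTION])
print(hits)
print(ranking)
```

Counting shared facts is a deliberately crude score; it is here only to show that ranking works on extracted facts rather than on raw keywords.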

In computer architecture, a bus is a communication system that transfers data between components inside a computer, and covers all related hardware and software components.

In the human brain and nervous system, “data” is moved around the brain and the body by roughly 100 billion specialized cells called “neurons”. The interaction of the nervous system, the brain’s neurons, and the hippocampus is thought to be of utmost importance in memory formation.

In the human brain, knowledge is encoded in memory cells by a combination of cell biology, biochemistry and electrical pulses, but we don’t know how. Obviously that encoding is independent of spoken language, so that what multilingual people learn in one language is transportable to another, but we don’t know what the mechanism for that is in human brains.

Interestingly, “neuroplastic brain changes”, including increased grey matter density, have been found in people with skills in more than one language, from children and young adults through to the elderly. In a computer’s semantic graph, knowledge is encoded in the RDF-triple data model that is also independent of the data format that it may be acquired from or published in. In both cases some kind of transformation occurs.

Computer reasoning can be applied to the triples to infer relationships between concepts and facts in different research papers. Here the computer activity of the processors and RAM possibly mimics the working memory that “occurs in the prefrontal cortex of the brain and is a type of short-term memory that facilitates planning, comprehension, reasoning, and problem-solving.

The prefrontal cortex is the most recent addition to the mammalian brain and has often been connected or related to intelligence and learning of humans”. Human experts can then consider, accept or ignore the computer-generated inferences in a human-machine partnership of discovery.
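A toy version of that partnership can be sketched in Python. The single rule below (if one paper links A to B and a different paper links B to C, propose that A may be related to C) is an assumption chosen for illustration, not a description of the reasoning Betsy applies; the `possibly_related_to` predicate and the paper ids are likewise invented.

```python
def propose_links(facts):
    """Propose cross-paper links from facts given as (paper_id, subject, predicate, object)."""
    proposals = []
    for paper_1, subject_1, _pred_1, object_1 in facts:
        for paper_2, subject_2, _pred_2, object_2 in facts:
            # Join on a shared term, but only across different papers,
            # and skip trivial loops back to the starting term.
            if object_1 == subject_2 and paper_1 != paper_2 and subject_1 != object_2:
                proposals.append({
                    "link": (subject_1, "possibly_related_to", object_2),
                    "via": object_1,
                    "papers": (paper_1, paper_2),
                })
    return proposals


# The example sentence, imagined here as split across two hypothetical papers.
facts = [
    ("paper-A", "the S protein", "induce", "protective immunity"),
    ("paper-B", "protective immunity", "against", "viral infection"),
]

proposals = propose_links(facts)

# The human expert has the final say: here a stand-in reviewer accepts everything.
accepted = [p for p in proposals if True]
for proposal in accepted:
    print(proposal["link"], "via", proposal["via"], "from", proposal["papers"])
```

Each proposal carries the papers it came from, so the expert can check the evidence before accepting or ignoring the inferred link.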

That’s enough now nerds!

For the University demonstrations, we compared Betsy to the University’s in-house built search engine and the PubMed search engine. The latter, PubMed, is the most popular search engine for medical research and is provided by the US National Library of Medicine within the US National Institutes of Health.

Success: We copied and pasted a small number of sentences from a research paper that all three search engines had stored in their databases. PubMed did not find any matching research papers, not even the original one we copied the sentences from. The University search engine found only the original paper.

Betsy found 25 research papers with high correlation, including the original, that would be of high interest to a researcher interested in the content of the searched-for sentences.

This was a spectacular success for us.

We are now reaching out to US Universities to partner in a commercial product development for Academic and Industry research.

In plain non-technical language, what is indisputable is that a computer can, at thousands of times the speed of a human:

- read a digital document or a database
- extract relevant phrases, words, and concepts (with some human training)
- remember everything it reads and extracts, using semantic computing techniques
- establish and record relationships in the extracted information, using computer reasoning techniques
- create relationships or infer them for humans to consider.

In the above, the computer out-paces any human researcher; a human simply cannot compete with the speed of a computer for some important tasks.

But “thinking”, Ms Rubin? A computer can out-think a human? Pigs might fly to Mars as well. Maybe if you are in IBM’s marketing department, but not if you are a human neurologist, or any other type of neuroscience expert, or Dr Schank.

While intelligence and thinking are linked to the poorly understood power of human reasoning, computers are currently limited to Semantic Graphs (that link “facts” to represent “knowledge”) and logical reasoning, both being tightly bound by mathematics; very useful, but also currently limited.

In contrast, humans have no such limitations. Adult humans have far superior reasoning based on experience, intuition, imagination, and emotion, for example. (Try telling that to a teenager.)

Just two years ago many “experts” referred to “Machine Learning” and “AI” interchangeably, implying that Machine Learning enabled computer intelligence. Today, we distinguish between the two.

The not-so-good news is that Machine Learning techniques such as Neural Networks are clever rather than intelligent, limited to pattern recognition and largely dependent on the human intelligence involved in making and supervising them. Artificial Intelligence techniques such as Semantic Graphs and computer reasoning are still at a very basic stage of mimicking human intelligence.

The good news is that these resource-hungry techniques are better supported by faster infrastructure solutions, such as solid-state memory and cloud computing.

Our understanding of Semantic Graphs and computer reasoning is now improving quickly, and these techniques are getting much greater attention than they have in the last twenty years. As a result, the potential for expanding the functionality of AI techniques such as Semantic Graphs and RDF is growing rapidly.

Artificial Intelligence is not a technique; it is a collection of techniques and as the functionality of each one is extended it will complement and extend the others.

Is Artificial Intelligence real?

Yes, in the last five years there has been more progress than in the previous 70 years. Using the right mix of technologies, computers can exhibit characteristics that mimic human brain abilities at an elementary level. A surprising number of animals also exhibit characteristics that mimic human brain abilities at an elementary level. [See here] A baby ibex can climb a vertical rock face from birth; a baby human can drink milk.

So, is Artificial Intelligence equivalent to or close to Human intelligence?

Definitely not.

Is the combination of Semantic Graphs and computer reasoning equivalent to or close to human thinking?

Definitely not.

Why is Artificial Intelligence so elusive?

Most experts think of AI in terms of mimicking the human brain, which took around seven million years to develop to its current level from, well, not much. We’ve been trying to copy it for a mere 70 years, but the more we know about the brain, the more we are in awe of it. Building a computer that fully mimics a human brain may be impossible.

Can computers outperform humans in finding hidden “insights and connections where there is an abundance of data”?

Absolutely. Where there are massive amounts of data, such as in medical research, a computer can find hidden information that humans, even supported by powerful traditional analytics tools, have no hope of finding.

Is Artificial Intelligence capable of substantial support to human thinking?

Most definitely, and it is now getting better very quickly, as our Betsy can demonstrate.

Is Watson capable of cognitive computing, implying that it is thoughtful?

It’s really a matter of semantics!

Dr Schank’s article can be found here.